CTG: How Diffusion Models Are Enhancing Controllable Text Generation (9/7)

When it comes to Controllable Text Generation (CTG), where we want to control aspects like tone, sentiment, and structure, there’s a vast array of methods out there. But have you ever thought about using diffusion models, which are commonly used in image generation, for CTG? That’s where Diffusion-LM comes into the picture!

Diffusion-LM applies diffusion techniques to text generation, offering more fine-grained control over the output. Instead of steering an autoregressive model one token at a time, as earlier plug-and-play methods do, it steers the whole sequence in a continuous latent space while the text is being denoised. Here’s a quick look at what sets it apart:

Diffusion-LM: How It Works

- Embedding Text in Continuous Space: While text data is discrete by nature, Diffusion-LM maps each token to a continuous embedding vector. This lets the model use the iterative denoising process typical of diffusion models (commonly used for images). Starting from noise, the model gradually refines a sequence of noisy vectors until they can be mapped back to coherent text, which makes it easier to steer features like tone and sentiment along the way.

- Intrinsic Control During Generation: Rather than adjusting text after it has been generated, Diffusion-LM applies control inside the generation process. At each denoising step, the intermediate vectors can be nudged toward the target attribute, so the text is produced with that attribute in mind from the beginning. So, if you want to control how formal or casual the tone is, the model adjusts this as it generates the text rather than retrofitting it afterward.

- Fine-Tuning for Conditional Learning: If you want Diffusion-LM to perform specific CTG tasks, like adjusting sentiment or style, fine-tuning is still needed. It adapts the model to more complex conditions and aligns it more closely with the attributes you’re focusing on. Without this, you might need significantly more data to get the same level of control (ar5iv).
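
To make the first point above more concrete, here is a minimal sketch of the "continuous space" idea. It is a toy illustration, not Diffusion-LM's actual code: the four-word vocabulary, the hand-picked 2-D embeddings, and the function names are all assumptions made for this example (the real model learns its embeddings). The sketch maps tokens to vectors, blends them with Gaussian noise using the standard diffusion forward formula, and rounds vectors back to the nearest token.

```python
import math
import random

# Hypothetical toy vocabulary with hand-picked 2-D embeddings (illustration only).
EMBEDDINGS = {
    "the": (1.0, 0.0),
    "movie": (0.0, 1.0),
    "was": (-1.0, 0.0),
    "great": (0.0, -1.0),
}

def embed(tokens):
    """Map discrete tokens to continuous vectors."""
    return [EMBEDDINGS[t] for t in tokens]

def add_noise(vectors, alpha_bar, rng):
    """Forward diffusion: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * eps."""
    keep = math.sqrt(alpha_bar)
    spread = math.sqrt(1.0 - alpha_bar)
    return [tuple(keep * x + spread * rng.gauss(0.0, 1.0) for x in v) for v in vectors]

def round_to_tokens(vectors):
    """Rounding: map each vector back to the token with the nearest embedding."""
    def nearest(v):
        return min(EMBEDDINGS, key=lambda t: math.dist(EMBEDDINGS[t], v))
    return [nearest(v) for v in vectors]

rng = random.Random(0)
tokens = ["the", "movie", "was", "great"]
x0 = embed(tokens)

# alpha_bar near 1 means almost no noise; alpha_bar near 0 means almost pure noise.
lightly_noised = add_noise(x0, alpha_bar=0.99, rng=rng)
heavily_noised = add_noise(x0, alpha_bar=0.01, rng=rng)

print(round_to_tokens(lightly_noised))
print(round_to_tokens(heavily_noised))
```

Generation runs this picture in reverse: a trained denoiser starts from heavily noised vectors and removes noise step by step, and only at the end are the vectors rounded to words.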

Plug-and-Play vs. Diffusion: What’s the Difference?

The key difference between earlier plug-and-play methods and Diffusion-LM is where and how the control is applied. Earlier plug-and-play methods keep a pre-trained autoregressive language model frozen and use external classifiers to steer its output one token at a time, from left to right. Because control acts token by token, it is harder to enforce properties of the whole sequence, which can lead to inconsistent or awkward results.

In contrast, Diffusion-LM applies control directly inside the generation process, at every denoising step and over the whole sequence at once, so the text is created with those attributes already considered. This tends to make the output more natural and better aligned with the desired tone, sentiment, or structure from the get-go.
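
One way such in-generation control can be realized is to nudge the latent at each denoising step along the gradient of an attribute score. The sketch below is a deliberately tiny, hedged illustration of that idea: the latent is a single number, the "denoiser" is just a Gaussian around a mean `mu`, and the attribute scorer is a hypothetical logistic function with weights `w` and `b`. None of these names or values come from Diffusion-LM itself.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def guided_step(x, mu, sigma, w, b, fluency_weight=1.0, step_size=0.1, n_updates=3):
    """Gradient ascent on fluency_weight * log N(x; mu, sigma^2) + log sigmoid(w*x + b).

    The first term keeps x close to what the (toy) denoiser proposes;
    the second term pulls x toward the attribute the (toy) scorer rewards.
    """
    for _ in range(n_updates):
        grad_fluency = -(x - mu) / sigma ** 2           # d/dx of the Gaussian log-density
        grad_control = w * (1.0 - sigmoid(w * x + b))   # d/dx of log sigmoid(w*x + b)
        x += step_size * (fluency_weight * grad_fluency + grad_control)
    return x

mu = 0.0            # toy denoiser's proposal for this step
unguided = mu       # without control, take the proposal as is
guided = guided_step(x=mu, mu=mu, sigma=1.0, w=2.0, b=0.0)

print("attribute score without guidance:", sigmoid(2.0 * unguided))
print("attribute score with guidance:   ", sigmoid(2.0 * guided))
```

The `fluency_weight` parameter captures the trade-off: a larger value keeps the latent closer to the denoiser's proposal, while a smaller one lets the attribute score pull harder.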

Need the Right Data for CTG? Check Out CUBIC’s Azoo Platform!

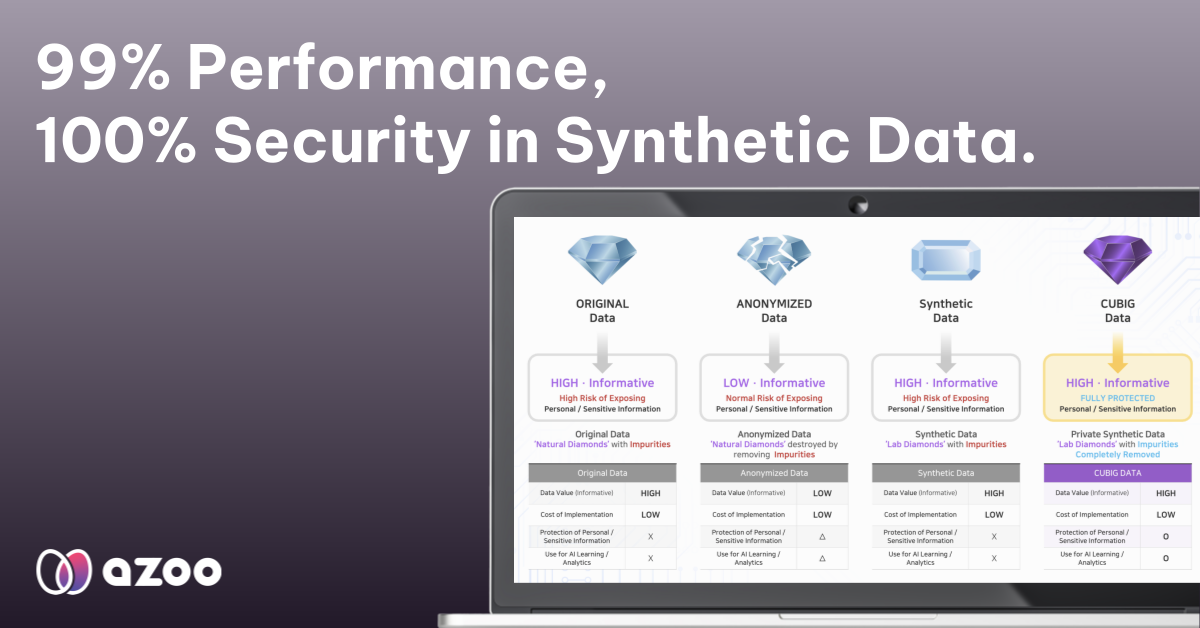

When you’re working on CTG, having diverse datasets rich in tone, sentiment, and structure is crucial to training your models. But what about the privacy concerns that come with sharing sensitive data? That’s where CUBIC’s Azoo Data Platform can help!

With Azoo and DTS (Data Transformation System), you can securely share and access datasets, ensuring your data remains protected while you train or fine-tune your models. So, whether you’re experimenting with Diffusion-LM or any other model, Azoo has got you covered. Why not take a peek at how Azoo can help make your CTG projects secure and efficient?

Diffusion-LM might just offer you the perfect tool for better CTG, and CUBIC’s Azoo ensures that you can do it all with peace of mind, knowing your data is secure!

Reference