Evaluating Data Diversity Through Topological Coverage: The Key to Robust and Fair AI Models

Data diversity isn’t just a checkbox in modern machine learning. It’s the foundation for building models that generalize well, limit bias, and perform reliably in real-world scenarios. Without diverse and representative datasets, even the most advanced algorithms will struggle with bias, underrepresentation, and generalization failures. But how do we measure “diversity” in a way that truly captures these aspects? One rigorous approach uses Topological Data Analysis (TDA), with a particular focus on coverage, to assess how well a dataset spans the space of possible inputs. This post outlines the mathematical ideas behind this approach and discusses its implications across various industries.

Understanding Coverage and TDA

TDA provides insights into the underlying geometric structure of data. In this context, coverage refers to how well the data represents or “covers” the underlying manifold or space where the data points exist. When the dataset effectively spans various regions of this space, we consider it diverse. However, if data points are concentrated in specific areas, leaving large sections unexplored, diversity suffers.

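Mathematically, this can be framed using Vietoris-Rips complexes, persistent homology, and Morse theory. A Vietoris-Rips complex at scale ε connects every group of data points that lie pairwise within distance ε of each other. Persistent homology then tracks topological features of that complex, such as connected components, loops, and voids, as ε grows. Components that only merge at a large scale point to clusters separated by empty space, and loops or voids that persist over a wide range of scales point to holes in the data. Both are signs of gaps or underrepresented regions in the dataset.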
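As a minimal sketch of the lowest-dimensional case, the snippet below computes 0-dimensional persistence (the scales at which connected components merge) for a Vietoris-Rips filtration using a union-find structure. It assumes Euclidean distance and the convention that an edge enters the filtration at a scale equal to its length. The function name `h0_persistence` and the toy clusters are illustrative and not taken from any particular library. Higher-dimensional features such as loops need a dedicated TDA library and are not covered here.

```python
import math
from itertools import combinations


def h0_persistence(points):
    """Return the scales at which connected components merge.

    Every point starts as its own component at scale 0. Two components
    merge (one of them "dies") at the length of the shortest edge that
    joins them, which is exactly Kruskal's minimum-spanning-tree order.
    """
    n = len(points)
    parent = list(range(n))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # All pairwise edges, shortest first.
    edges = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i, j in combinations(range(n), 2)
    )

    deaths = []
    for length, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[ri] = rj
            deaths.append(length)
    return deaths  # n - 1 values; the last surviving component never dies


# Toy data (assumed): two tight clusters separated by an empty stretch.
cluster_a = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1)]
cluster_b = [(5.0, 0.0), (5.1, 0.0), (5.0, 0.1)]
deaths = h0_persistence(cluster_a + cluster_b)
largest_gap = max(deaths)
```

Here most merge scales are around 0.1, while a single merge happens near 4.9. That one long-lived component is the signature of the empty region between the two clusters.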

In simple terms, coverage measures how thoroughly a dataset explores the problem space. If there are large “gaps” in coverage, it indicates that certain aspects of the problem are not fully represented.
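One common way to make this precise is the covering radius. The notation below is introduced here as an assumption, not taken from a specific reference: X is the input space with metric d, S is the finite dataset, and B(s, ε) is the closed ball of radius ε around s.

```latex
\[
  \rho(S) \;=\; \sup_{x \in X} \, \min_{s \in S} \, d(x, s),
  \qquad
  X \subseteq \bigcup_{s \in S} B(s, \varepsilon)
  \;\iff\;
  \rho(S) \le \varepsilon .
\]
```

A small ρ(S) means every point of the space has a nearby sample. A large ρ(S) means at least one region sits far from all of the data.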

A Practical Example: Visualizing Data Diversity with TDA

Consider a dataset representing a robot’s movements in a 3D space. A diverse dataset should capture the robot’s navigation across the entire area, including corners and obstacles. Using TDA, we can construct a simplicial complex to represent the robot’s path and, through persistent homology, evaluate how well this path “covers” the space. If there are gaps (e.g., parts of the room the robot rarely visits), TDA can help identify these underrepresented regions, highlighting areas where diversity is lacking.
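Before reaching for persistent homology, a much simpler stand-in can already flag unvisited regions: divide the room into a grid and list the cells that contain no recorded position. The sketch below is a plain occupancy grid, not a TDA computation. The function name `unvisited_cells`, the room dimensions, and the logged path are all hypothetical, and positions are assumed to lie inside the room with the origin at one corner.

```python
def unvisited_cells(positions, room_size, cells_per_axis):
    """Return the set of grid cells that contain no recorded position.

    positions      -- iterable of (x, y, z) coordinates inside the room
    room_size      -- (width, depth, height) of the room, origin at a corner
    cells_per_axis -- number of grid cells along each axis
    """
    def cell_of(p):
        # Map a coordinate to its cell index, clamping points on the far wall.
        return tuple(
            min(int(c / size * cells_per_axis), cells_per_axis - 1)
            for c, size in zip(p, room_size)
        )

    visited = {cell_of(p) for p in positions}
    all_cells = {
        (i, j, k)
        for i in range(cells_per_axis)
        for j in range(cells_per_axis)
        for k in range(cells_per_axis)
    }
    return all_cells - visited


# Hypothetical log: the robot only moves along the floor diagonal
# of a 4 x 4 x 2 room.
room = (4.0, 4.0, 2.0)
path = [(t, t, 0.1) for t in (0.5, 1.5, 2.5, 3.5)]
empty = unvisited_cells(path, room, cells_per_axis=2)
coverage = 1 - len(empty) / 2**3  # fraction of cells with at least one sample
```

With this path only 2 of the 8 cells are visited, so `coverage` is 0.25 and `empty` lists the corners and the upper half of the room that the robot never reached. A grid like this depends heavily on the chosen cell size, which is one reason scale-aware tools such as persistent homology are attractive.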

Why Coverage Matters for Data Diversity

Traditional diversity metrics often rely on simple statistical measures like variance or entropy, which can overlook deeper structural relationships in the data. In contrast, coverage provides a more nuanced metric by evaluating how well the dataset spans the entire space. It goes beyond individual data points to assess whether the dataset captures the full dynamics of the problem space.
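A small one-dimensional illustration of the difference, using made-up data: one dataset piles all samples at the two ends of the interval [0, 1], the other spreads them evenly. The helper `covering_radius` and the probe grid are assumptions for this sketch. The probes stand in for the full input space, and the covering radius is the largest distance from any probe to its nearest sample.

```python
import statistics


def covering_radius(samples, probes):
    """Largest distance from any probe point to its nearest sample (1-D)."""
    return max(min(abs(x - s) for s in samples) for x in probes)


# Probe points standing in for the full input space [0, 1].
probes = [i / 100 for i in range(101)]

# Dataset A: everything piled at the two extremes.
extremes = [0.0] * 5 + [1.0] * 5
# Dataset B: ten samples spread evenly over the interval.
spread = [i / 9 for i in range(10)]

var_a = statistics.pvariance(extremes)
var_b = statistics.pvariance(spread)
rad_a = covering_radius(extremes, probes)
rad_b = covering_radius(spread, probes)
```

Dataset A has the higher variance, yet its covering radius is 0.5 because the whole middle of the interval is empty. Dataset B has lower variance but leaves no point of the interval more than about 0.06 away from a sample. Variance alone would rank the two datasets the wrong way round for coverage.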

For example, in image classification, high coverage would mean the dataset spans various lighting conditions, angles, and object variations. Low coverage suggests that the dataset focuses only on narrow scenarios, leading to poor performance when exposed to new, unseen conditions.

Real-World Applications

Data diversity has real-world implications across several domains:

- Healthcare: In medical image analysis, diverse datasets ensure representation across different patient demographics and conditions, reducing bias and improving model accuracy for all populations.

- Autonomous Vehicles: Training autonomous systems requires datasets that cover a variety of environments, including different weather conditions, terrains, and lighting. Without proper coverage, these systems risk underperforming in real-world scenarios.

- Natural Language Processing (NLP): For multilingual models, coverage ensures representation of different dialects, sentence structures, and linguistic patterns, leading to better generalization across languages.

- Finance: In algorithmic trading, datasets must cover a wide range of market conditions, from bull markets to financial crises. Insufficient coverage can result in strategies that fail under rare but critical conditions.

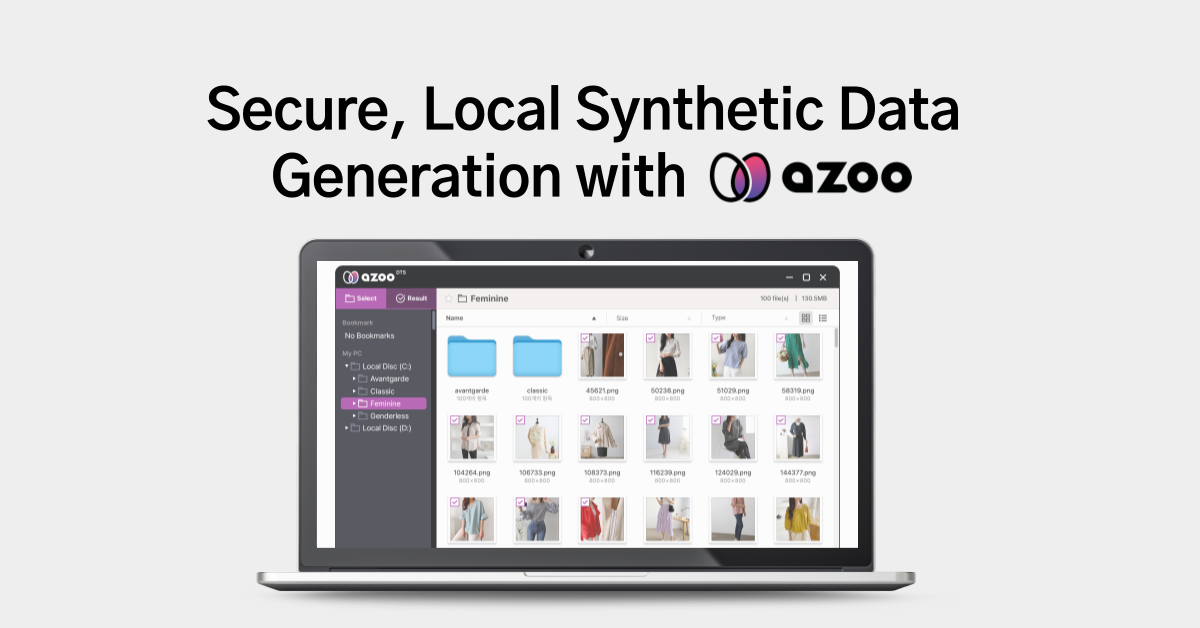

Data Diversity and CUBIG

At CUBIG, we take data diversity seriously, continuously developing synthetic data generation techniques to ensure that datasets meet the highest standards of diversity.

Your data never leaves your local environment, yet the process still adheres to differential privacy (DP) standards, guaranteeing both security and diversity. If you’re interested in how we maintain these standards while keeping original data on the user’s local machine, explore CUBIG’s DTS (Data Transformation System), a unique, secure system that ensures data variety while meeting stringent privacy requirements.

For more on our solutions, you can check out Azoo, where we push the boundaries of secure and diverse data generation.